Preparing for Agentic AI

IIS Executive Insights Cyber Expert: David Piesse, CRO, Cymar

Glossary

Agentic workflows—End-to-end processes that leverage digital agents to execute tasks and make decisions with minimal human oversight.

Agentic AI—The combination of AI agents, automation, knowledge, and process orchestration of dynamic, complex processes with minimal human oversight.

AI agents—Software robots using AI skills to accomplish more complex tasks with the ability for task planning and autonomous decision-making.

AI OS—Artificial intelligence operating system created by the interaction of AI agents.

Artificial general intelligence (AGI)—Also called human-level intelligence AI, it is capable of performing cognitively demanding tasks at a level comparable to, or surpassing, that of humans.

Autonomous—The advancement of AI to perform tasks with limited human oversight.

Causal AI—A subfield of AI that understands and models cause-and-effect relationships.

Generative artificial intelligence (GenAI)—A subfield of AI using generative models to produce text, images, videos, or other forms of data. The models learn the underlying patterns and structures of training data and use them to produce new data content based on the input, which often comes in the form of natural language prompts.

AIoT agents—A combination of artificial intelligence, Internet of Things (IoT), and agentic AI.

Knowledge economy—A system of consumption and production based on intellectual capital. It refers to the ability to capitalize on scientific discoveries and applied research.

Large action model (LAM)—Comprehensive models capable of handling a broad range of tasks. They are built to manage complex actions.

Large language model (LLM)—A type of machine learning model designed for natural language processing tasks such as language generation and text handling.

Natural language processing (NLP)—Computational techniques for analysis and synthesis of natural language and speech.

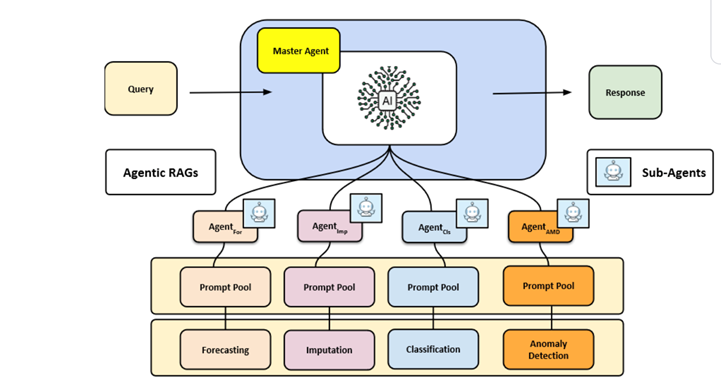

Retrieval augmented generation (RAG)—A technique in which a model retrieves relevant external data and uses it to ground generated output. In agentic RAG, a master AI agent delegates tasks to sub-agents that capture multi-modal data based on their speciality.

Small action model (SAM)—Designed to perform specific, well-defined tasks with high efficiency and low computational requirements.

Small language model (SLM)—Computational models that respond to and generate natural language. Trained to perform specific tasks using fewer resources than LLM.

Synopsis

“Intelligence is the ability to adapt to change”— Stephen Hawking

In the past three years, the emergence of generative artificial intelligence (GenAI) has dominated the world’s attention. AI itself dates back to the 1960s and the space industry. GenAI has reached high levels of adoption quickly because of its ease of access and use by the mass market. While useful, GenAI has mathematical limitations that require integration with other AI subfields to fulfil expectations.

The vision of GenAI has plateaued and reached an inflection point. Large language models (LLM) are suitable for content creation but limited in providing actionable intelligence and application integration, and in garnering trust, governance, and security. Being based on linear regression[i], they are unable to conduct time series analysis[ii] when data variables are not linear and change needs to be measured over time. This limitation affects their ability to deliver accurate predictive analytics. Meaningful impact requires an evolution in which AI agents, automation, and people combine to perform complex autonomous business workflows.

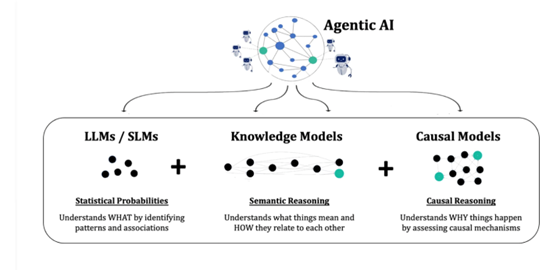

A key component of this evolution is a shift from statistical analysis (correlation) to causality, endowing AI agents and GenAI with human reasoning and linking LLM, causality, and knowledge models into a transformative technology known as agentic AI, as shown below.

This paper explains the shift occurring from GenAI to agentic AI, enabling the capture, storage, and reuse of knowledge to address talent shortages, modernize legacy systems, embed insurance, achieve AI return on investment (ROI), improve customer service, and double industry sector growth with evergreen revenue. The human remains firmly in the loop but must adapt to stay relevant and augmentative. A report by PwC[iii] shows agentic AI will have a significant economic impact, contributing between $2.6 trillion and $4.2 trillion annually to global GDP across various industries by 2030. Investments in AI are also expected to triple to $140 billion by the end of the decade, catalysed by agentic AI frameworks.

Overview of AI Agent Market

The world has experienced the emergence of digital chatbots, AI agents, and co-pilots, but is about to undergo change involving an exponential confluence of new business models, technological innovation, and regulatory focus, destined to reshape the business landscape. AI agents are evolving beyond basic task automation as advances in deep learning[iv] and contextual analytics[v] enable systems to anticipate user needs and not only respond to explicit commands. As natural language processing (NLP) and computer vision technologies improve, AI agents can better interpret nuanced data from customer interaction and dynamically adjust actions based on real-time movements in customer behaviour.

It is important to give two definitions as applied to data science.

| Term | Definition |
| --- | --- |
| Heuristics | Proceeding to a solution by trial and error or by rules that are loosely defined. |
| Hypothesis testing | A method of making predictions about various phenomena and then deciding whether or not the prediction is supported by real-world evidence. This is the science of why things happen. |

The LLM approach uses heuristic methods. Mechanistic interpretability[vi], a technique for analysing mathematics in AI black boxes, shows that LLM generate “bags of heuristics”[vii] rather than efficient predictive analysis and human causal reasoning. This is why LLM learning and training is so long and costly: these massive models have to memorise vast amounts of rules and cannot compress that knowledge like the human mind. To derive all those individual rules, they have to parse every combination of words, images, and other content to train them, needing to see those combinations on a repeatable basis.

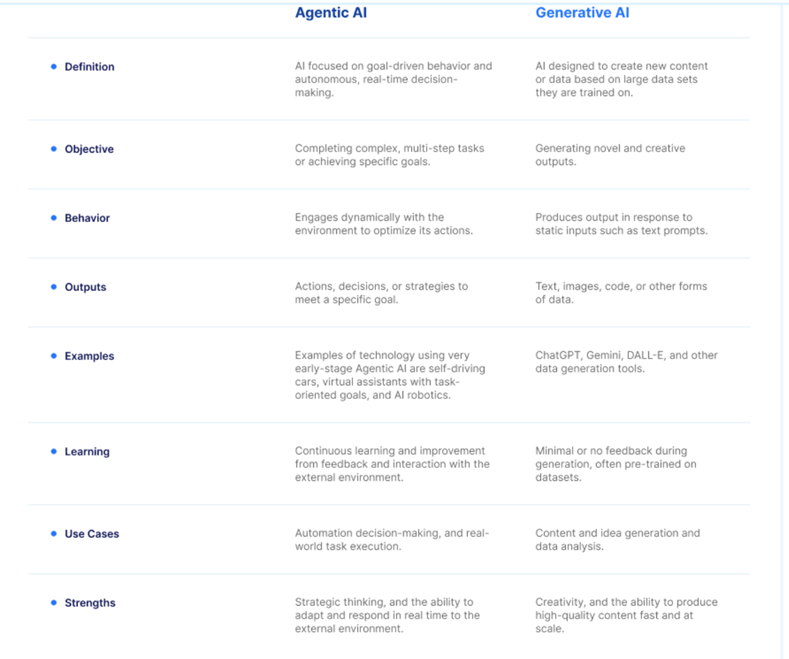

Agentic AI, however, when endowed with causality, is based on principled formalisms, not heuristics, leveraging scientific hypothesis testing to determine causal relationships and their predictive, more accurate, measurable vectors of direction, magnitude, and duration. The following table shows the differences between agentic AI and GenAI, which can be summarized as moving from task-oriented automation to goal-based objectives.

Source: Virtuoso QA[viii]

What is Agentic AI?

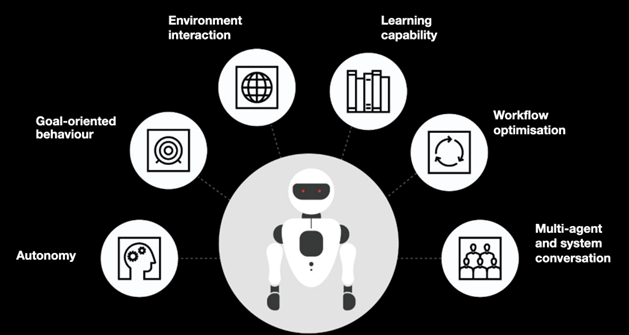

Agentic AI platforms are scalable software, pattern planning, and frameworks that direct AI systems to tackle complex, multi-layered problems by breaking larger tasks into smaller, achievable steps while maintaining focus on the original goal. AI assistants (chatbots) help users execute tasks, retrieve information, and create content via explicit user prompts. AI agents achieve goals by adaptation, sensing, and learning based on human and algorithmic feedback loops to make better decisions without direct guidance. Agentic AI systems assist users and organizations in achieving goals that are too complex for a single AI agent and enable multiple agents with their own sets of goals, behaviours, policies, and knowledge to interact in an orchestrated ecosystem.

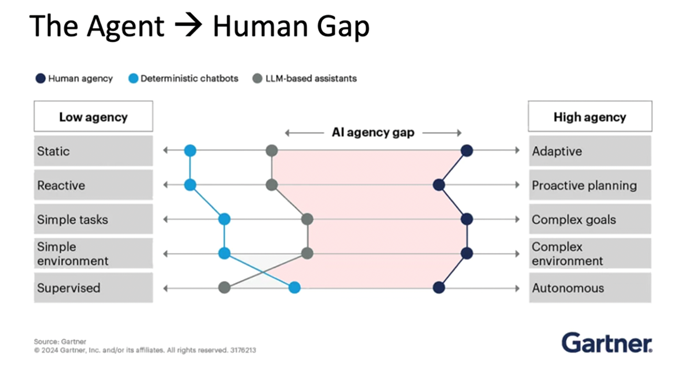

Gartner Group agentic AI research[ix] shows a gap between GenAI and LLM-based assistants and a future promise of AI agents. AI today struggles to understand complex goals, adapt to changing conditions, proactively plan, and act more autonomously, but evolution is rapid.

Source: Gartner

This shows the chatbots and the LLM assistants in GenAI relating to human agents in achieving goals. The gap represents the vacuum to be filled by the early adopters of agentic AI. The trend is to the right to autonomous activity to resolve situations quickly when human intervention is not possible, such as in real-time cyberattack defence.

Feedback Loops (Reinforcement Learning/Data Flywheels)

A key component of AI is the feedback loop, which allows machines to learn and improve over time. Feedback comes from various sources, such as human input, data analysis, or other AI systems. The quality and quantity of real-world data are paramount, though subject to privacy concerns and high computational demands. Reinforcement learning techniques[x] optimize the process by providing more targeted feedback: an AI agent receives rewards or penalties based on its actions in situ, learning which actions result in positive and negative outcomes. This allows algorithms to be adjusted more effectively than with unsupervised or supervised learning alone, while AI system performance is continuously monitored and evaluated.
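The reward-and-penalty loop described above can be illustrated with a minimal sketch. It uses an epsilon-greedy bandit, which is one simple reinforcement learning technique chosen here for brevity and not necessarily what any production agent uses. The function name `run_bandit`, the reward probabilities, and the parameter values are all assumptions made for illustration.

```python
import random

def run_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn action values from rewards (+1) and penalties (-1)."""
    rng = random.Random(seed)
    counts = [0] * len(reward_probs)    # times each action was tried
    values = [0.0] * len(reward_probs)  # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random action.
            action = rng.randrange(len(reward_probs))
        else:
            # Exploit: pick the action with the best estimate so far.
            action = max(range(len(values)), key=values.__getitem__)
        # Feedback from the (simulated) environment.
        reward = 1.0 if rng.random() < reward_probs[action] else -1.0
        counts[action] += 1
        # Incremental update of the average reward for this action.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts

# Hypothetical environment: action 2 succeeds most often.
values, counts = run_bandit([0.2, 0.5, 0.8])
best_action = max(range(len(values)), key=values.__getitem__)
print(best_action, counts)
```

Over many steps the agent concentrates on the action that earns the most reward, which is the "learning which actions result in positive and negative outcomes" behaviour in miniature.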

Metrics such as accuracy are used to measure performance and regularly reviewed to identify areas of improvement. This allows early detection and pre-emption of problems as new data becomes available or environmental conditions change. AI guardrails maintain integrity and reliability of the data, ensuring that the outputs are accurate, compliant, and secure.

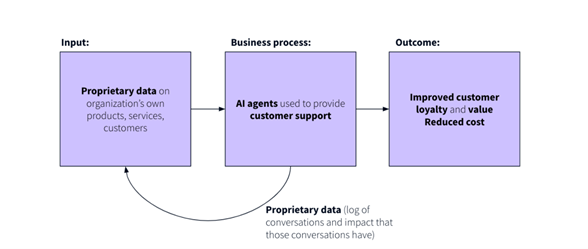

Real-world AI agent systems may have hundreds to thousands of AI agents working simultaneously to automate processes. A data flywheel streamlines AI agent operations and ensures that datasets accurately reflect real-world scenarios. Building the next generation of agentic AI and GenAI applications using a data flywheel involves rapid iteration and use of institutional data.

Source: Data Flywheel SnowPlow[xi]

Semantic Layer

Agentic AI relies on a consolidated view of data for an organisation to harmonise and interpret raw data accessible by AI agents and end users, providing strategically aligned queries and recommendations. Computational linguistic standards[xi] comprehend cause-and-effect reasoning while keeping human expertise in the loop, creating a unified intelligence layer revealing multi-dimensional relationships across data sources using cognitive augmentation[xii]. It combines machine learning with a contextual understanding to identify patterns invisible to the human eye, explaining the “why” behind trends and suggesting optimal actions while acting as a data integrity guardrail.

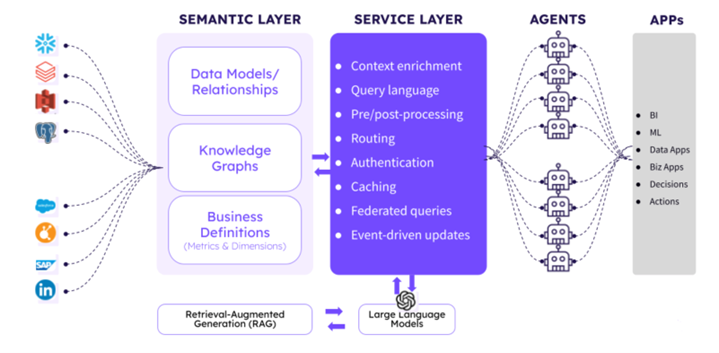

Feeding raw data to an LLM and expecting accurate answers to complex business questions risks generating inaccurate results and hallucinations. The semantic layer contains a knowledge graph that illustrates the relationships between data. By combining domain knowledge and business logic, knowledge graphs provide the essential business context that AI agents need to interpret data accurately and utilise feedback loops and data flywheels to absorb learning over time. The interaction between semantic layers and AI agents is shown below.

Source: Tellius[xiv]

Retrieval augmented generation (RAG) searches through volumes of unstructured data and the semantic layer optimizes queries and retrieval based on predefined data models. Combining RAG with a semantic layer provides AI agents with a comprehensive, consistent, and contextually rich understanding of the data. Governance and control will be required as AI agents dynamically change the semantic layer based on the feedback loops.
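As a rough sketch of how retrieval and a semantic layer might combine, the example below ranks a few unstructured snippets against a question and attaches governed business definitions before a prompt would be sent to a model. Everything here is hypothetical: the `SEMANTIC_LAYER` dictionary, the sample documents, and the word-count cosine similarity stand in for the data models, knowledge graphs, and embedding search a real platform would use.

```python
import math
import re
from collections import Counter

# Hypothetical semantic layer: governed business terms and their definitions.
SEMANTIC_LAYER = {
    "loss ratio": "incurred claims divided by earned premium",
    "retention": "share of policies renewed at expiry",
}

# Hypothetical unstructured documents.
DOCUMENTS = [
    "Motor claims rose sharply in Q3 after severe flooding.",
    "Policy renewals improved once the mobile app launched.",
    "The loss ratio for cyber cover fell as premiums increased.",
]

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True
    )
    return ranked[:k]

def build_prompt(query, documents):
    """Combine semantic-layer definitions with retrieved context."""
    definitions = [
        f"{term}: {meaning}"
        for term, meaning in SEMANTIC_LAYER.items()
        if term in query.lower()
    ]
    context = retrieve(query, documents)
    return "\n".join(
        ["Definitions:"] + definitions + ["Context:"] + context + ["Question: " + query]
    )

question = "Why did the loss ratio change for cyber cover?"
prompt = build_prompt(question, DOCUMENTS)
print(prompt)
```

The design point is that the model receives both the retrieved evidence and the agreed meaning of the business term, so it does not have to guess what "loss ratio" means in this organisation.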

Causality in Agentic Systems

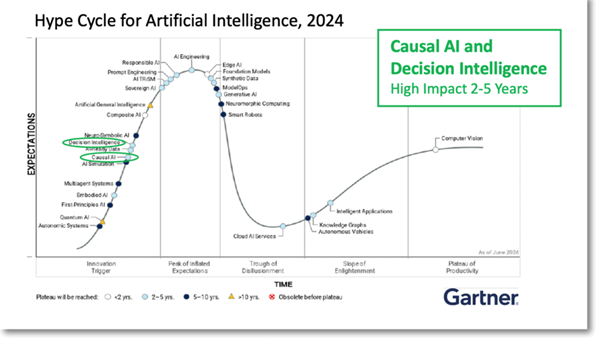

“The next step in AI requires causal AI. A composite AI approach that complements GenAI with Causal AI offers a promising avenue to bring AI to a higher level.”[xv] Gartner shows the importance of the AI causality shift with respect to agentic AI.

Source: Gartner

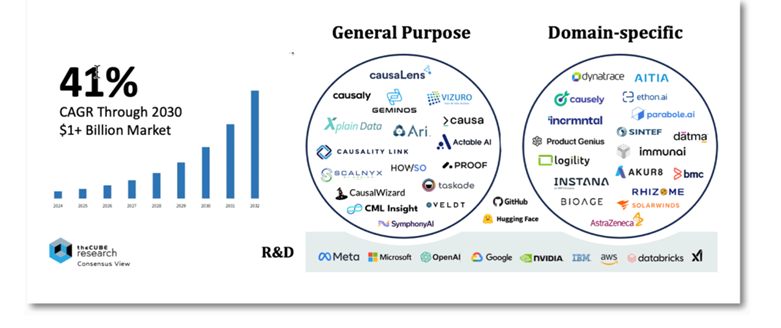

Spending on causality is expected to grow at 41 percent CAGR through 2030[xvi]. A survey of data scientists[xvii] ranked causal AI as the top AI trend as it can reason and make choices like humans, going beyond narrow machine learning predictions. It can be directly integrated into agentic AI decision-making and is a key part of enterprise AI evolution.

Causality enables multiple scenarios to understand cause and effect of events, create predictions, generate content, identify patterns, and isolate anomalies. Today multiple causal AI tools and methodologies are available to data scientists, empowering them to infuse cause-and-effect understanding and reasoning into AI systems and subfields. This closes the gap within LLM limitations and endows agentic AI systems by orchestrating an ecosystem of collaborating AI agents to help enterprises scale and achieve goals.

LLM are based on statistical correlations (probabilities) between an event and its outcome, but correlation does not imply causation, which can lead to incorrect conclusions or bias based on wrong assumptions. Correlation-driven AI systems operate in static environments, relying on observed patterns derived from historical data, assuming these patterns remain stable over time. For AI systems to be effective they must operate in a dynamic environment across time series analysis to adapt and respond to changing conditions and must understand the underlying causes driving data variable relationships.
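A small simulation can make the correlation-versus-causation point concrete. In this sketch, which uses invented variables and coefficients, storm severity drives both call-centre volume and claims. The two are strongly correlated in observed data, yet when call volume is set independently (an intervention), the relationship disappears because calls never caused claims.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient, computed from first principles."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

rng = random.Random(42)
n = 5000

# Hidden common cause (confounder): storm severity.
storm = [rng.gauss(0, 1) for _ in range(n)]

# Observed world: storms drive both calls and claims.
calls = [s + rng.gauss(0, 0.5) for s in storm]
claims = [2 * s + rng.gauss(0, 0.5) for s in storm]
observed_corr = pearson(calls, claims)

# Intervention: call volume is set independently of storms.
forced_calls = [rng.gauss(0, 1) for _ in range(n)]
claims_after = [2 * s + rng.gauss(0, 0.5) for s in storm]
intervened_corr = pearson(forced_calls, claims_after)

print(round(observed_corr, 2), round(intervened_corr, 2))
```

A purely correlation-driven system would treat call volume as a good predictor of claims, which holds only while the underlying cause keeps behaving as it did in the historical data.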

Predictive analytics must imagine new versions of history to shape and prioritise outcomes positively and show better accuracy of predictions by vectors of magnitude, direction, and duration. An Apple[xviii] study exposed LLM limitations of up to 65 percent decline in accuracy due to unexplainable black-box approaches, delineating the risk of relying on LLM alone for problem-solving without logical reasoning.

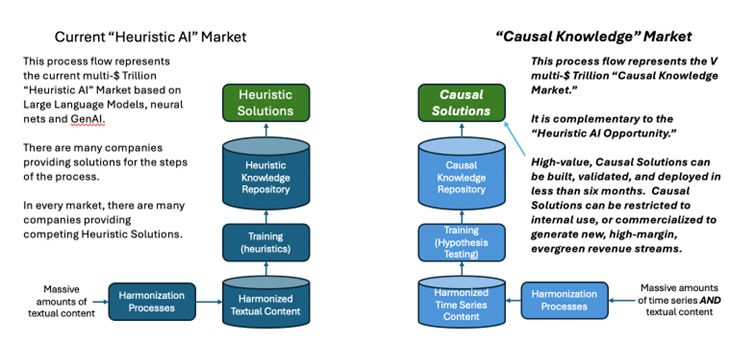

Causal AI is forward looking but also understands statistical probabilities and how they change in relation to events and the use of back testing to prove the scientific hypothesis. Without causality, AI agents only perform well if the future resembles the past, which is not dynamic. The diagram below illustrates the complementary nature of the LLM and causal AI markets.

Source: Vulcain.AI[xix]

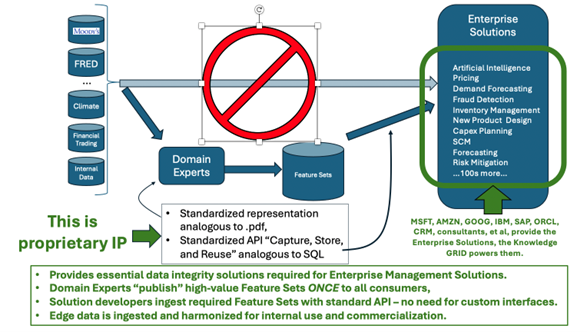

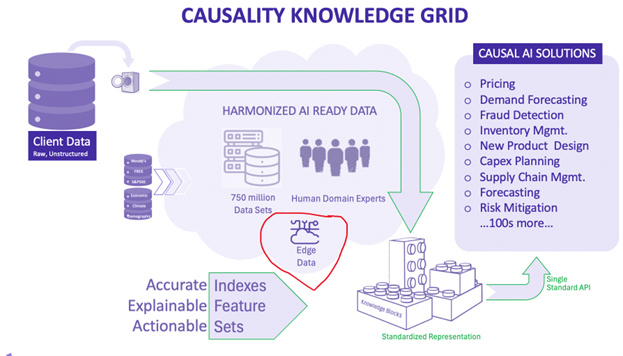

Domain expertise is an essential ingredient to the causal process and subsequently to agentic AI as part of a standardized representation and harmonisation process. As shown below, automation without human domain knowledge intervention is not good practice. The standardized harmonisation, whether from LLM or causality, means any dataset can be added and is immediately available for processing in the model.

In the example below, the vendor IP is essentially an extension of Structured Query Language (SQL)[xx], which means easy integration into the process. Because counterfactual questions are being asked of the models, the standardised structure of the data query needs to be globally understood, hence the focus on the SQL dataset component.

Source: Vulcain.AI

Combining LLM and Causal Reasoning to Understand Agentic AI

The transition to an AI-based “Knowledge Economy” is accelerating, and the market is estimated to reach $1.8 trillion by 2030[xxi]. Current market focus is on LLM-based solutions built on neural net technology and led by Google’s “Transformer Architecture”[xxii]. Virtually every commercial enterprise solution can benefit from LLM augmentation, and companies are recognizing value in capturing internal knowledge through LLM solutions and development tools. Combining LLM with causality is a powerful strategy, adding the KPIs below.

| Heuristic LLM models (endowed with →) | Scientific causal models |
| --- | --- |
| Low value | High value |
| Difficult to explain | Explainable |
| Unable to optimize responses | Enables predicted, optimized results |
| No engineering principles | Engineering principles in any domain |
| Expensive to build in time and money | Lower cost and quicker to build |
| No a priori knowledge | Performance predictions a priori |
| Difficult to evaluate and validate results | Easy evaluation and validation |

This brings three component parts together into the agentic AI model combining LLMs, knowledge models, and causal models, as shown by the diagram below.

Source: theCube Research[xxiii]

Causal solutions and LLM can be combined into wholesale knowledge platforms where partners benefit from causal solutions integrated into new or existing heuristic (LLM) solutions. A heuristic solution can be integrated into a causal solution by identifying and partnering with key content vendors (Moody’s, S&P, Bloomberg, Refinitiv, FactSet) to build repositories of causal knowledge that can be used as basis for many innovations. This also enables partnerships with major technology vendors (Microsoft, Google, Meta, Oracle, IBM) to integrate causality with heuristic solution development tools. This leverages partners to create highly efficient sales and marketing distribution channels to achieve network effects. This knowledge platform can then be white labelled into enterprises by means of a single API. It can be stated that without:

- Domain expert input, both internal and external vendor content will generate underperforming enterprise solutions.

- Standardized representations, especially with respect to causal relationships, most enterprise solutions are prohibitively expensive to build and maintain.

- A standardized approach to capturing, storing, re-using, and monetizing knowledge, organizations are wasting their most precious strategic assets: the tacit knowledge of their employees and the intellectual capital of intangible assets.

To overcome limitations in LLM, an interim step known as chain-of-thought (CoT) prompting[xxiv] is deployed to simulate humanlike reasoning by delineating complex tasks into a sequence of logical steps toward a final resolution. In the absence of causality, CoT dedicates more time to reasoning and double-checking accuracy than LLM but has longer response times, is more costly, and lacks transparency in terms of explanation. Google DeepMind and Stanford University are working on the Self-taught Reasoner (STaR) method[xxv] to infuse reasoning capabilities into AI models through a process of self-supervised learning. CoT, like LLM, relies on statistical correlations and lacks causality.

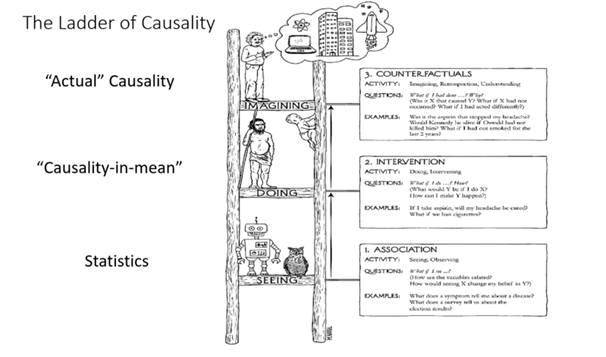

Ladder of Causality[xxvi]

Business sectors have been focused on GenAI but are now moving along a sliding scale, with GenAI searches (SQL query) at the lower end and agentic AI that can genuinely plan and reason at the higher end. At that higher end the task is no longer an internet search but an interaction with APIs to gather documents. This aligns with the ladder of causality framework, a causal hierarchy of three rungs, each building upon the last and adding information not available to models on a lower rung:

(1) Observational information

(2) Interventional information

(3) Counterfactual information

Reinforcement learning or feedback loops naturally fall on the interventional rung since AI agents learn about optimal actions by observing outcomes due to their interventions in the system. They cannot, however, use interventional data to answer counterfactual “what if” questions without additional information. Counterfactual questions are purposeful for decision making. Business sectors today are between the first/second rung of the ladder.

Source: HPCC based on Judea Pearl Ladder of Causality
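The three rungs can be sketched on a toy structural causal model. The model below is an assumption made purely for illustration: a background factor `z` influences both a binary action `x` and an outcome `y`, and the coefficients `EFFECT_OF_X` and `EFFECT_OF_Z` are invented. The counterfactual step works only because the structural equation for `y` is known, which is the kind of "additional information" the text says interventional data alone does not supply.

```python
import random

# Assumed structural coefficients (illustrative only).
EFFECT_OF_X = 2.0
EFFECT_OF_Z = 3.0

def sample_unit(rng, do_x=None):
    """Draw one unit; if do_x is given, x is set by intervention."""
    z = rng.gauss(0, 1)
    if do_x is None:
        x = 1 if z + rng.gauss(0, 1) > 0 else 0  # x depends on z
    else:
        x = do_x                                  # link from z to x is cut
    y = EFFECT_OF_X * x + EFFECT_OF_Z * z + rng.gauss(0, 1)
    return z, x, y

def mean(values):
    return sum(values) / len(values)

rng = random.Random(7)

# Rung 1, observation: compare outcomes where x happened to be 1 or 0.
data = [sample_unit(rng) for _ in range(20000)]
observational_gap = mean([y for _, x, y in data if x == 1]) - mean(
    [y for _, x, y in data if x == 0]
)

# Rung 2, intervention: set x directly and compare outcomes.
treated = [sample_unit(rng, do_x=1)[2] for _ in range(20000)]
control = [sample_unit(rng, do_x=0)[2] for _ in range(20000)]
interventional_gap = mean(treated) - mean(control)

# Rung 3, counterfactual: what would y have been for this unit under new_x?
def counterfactual_y(z, x, y, new_x):
    noise_y = y - EFFECT_OF_X * x - EFFECT_OF_Z * z          # recover unit noise
    return EFFECT_OF_X * new_x + EFFECT_OF_Z * z + noise_y   # replay with new_x

z0, x0, y0 = next(unit for unit in data if unit[1] == 0)
y0_cf = counterfactual_y(z0, x0, y0, new_x=1)

print(round(observational_gap, 2), round(interventional_gap, 2), round(y0_cf - y0, 2))
```

The observational gap overstates the effect of `x` because `z` pushes both variables, the interventional gap recovers the assumed effect of about 2, and the counterfactual answers a "what if" question for one specific unit.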

Agentic AI 2025: Market Size, Key Players, and Growth

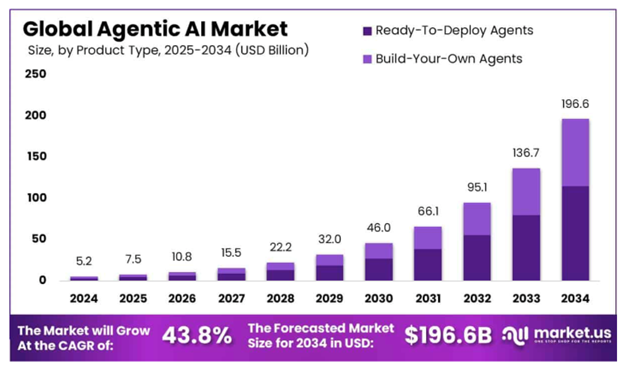

According to MarketsandMarkets, the global AI market is projected to grow from $214.6 billion in 2024 to $1,339.1 billion in 2030, a CAGR of around 35.7 percent[xxvii]. In alignment, the global agentic AI market is expected to be worth around $196.6 billion by 2034, up from $5.2 billion in 2024, growing at a CAGR of 43.8 percent[xxviii]. North America holds a dominant market position, with the United States agentic AI market standing at $1.58 billion in 2024 and a CAGR of 43.6 percent[xxix]. Atera have published agentic AI statistics[xxx] and industry quotes.

Source: Market.US
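The growth rates quoted above can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short sketch below applies it to the start and end values given in the text; the helper name `cagr` is simply a label chosen here.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.357 means 35.7 percent)."""
    return (end_value / start_value) ** (1 / years) - 1

# Global AI market, $ billions, 2024 to 2030.
ai_market = cagr(214.6, 1339.1, 2030 - 2024)

# Global agentic AI market, $ billions, 2024 to 2034.
agentic_market = cagr(5.2, 196.6, 2034 - 2024)

print(f"{ai_market:.1%} {agentic_market:.1%}")
```

Both results agree with the cited figures of roughly 35.7 percent and 43.8 percent.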

The following landscape shows the big technology players in the agentic AI space and the growing vendor base, both horizontally and vertically.

Source: theCube Research

The major trends for agentic AI are hyper personalisation, broader ecosystem integration, real-time edge computing and ethical/regulatory issues. The main challenges are data quality/integration, trust/adoption, and maintaining scalability. However, adoption is underway.

Duplex[xxxi] by Google conducts human-like conversations to book appointments, integrated into other Google Assistant services. IBM Watson deployment in healthcare diagnostics[xxxii] improves patient outcomes by providing physicians with rapid, data-driven insights. The Mayo Clinic leverages AI-driven diagnostic tools[xxxiii] to process vast amounts of medical data to enhance the accuracy and speed of diagnoses. Microsoft is a leader in enterprise AI solutions such as Azure Bot Services[xxxiv]. Amazon’s approach to AI agents is represented by Alexa for home automation systems and e-commerce[xxxv]. Pegasystems, with a history of rules implementations, embeds agentic AI into business workflows[xxxvi] to automate complex processes while maintaining compliance and adaptability.

Regionally, European organizations face stringent data privacy laws and regulatory compliance requirements such as GDPR and the EU AI Act 2024-2-25, which foster a unified cybersecure digital market[xxxvii]. China shows rapid adoption and innovation in consumer-facing AI with the emergence of DeepSeek[xxxviii], challenging established global players like OpenAI, Microsoft, and Nvidia. North America is active in AI innovation, with leading-edge research institutions, robust venture capital, and technological experimentation. This leadership is reinforced by strategic geopolitical measures which have restricted access to critical semiconductor and chip technologies for competitors in China and India. In the U.S., Oracle, SoftBank, and OpenAI are investing $500 billion in AI through Project Stargate[xxxix].

Integra’s Ready-to-Deploy AI Agents[xl] captured over 58.5 percent of the market share[xli]. These agents are preconfigured, enabling businesses to implement them rapidly without substantial customization or R&D. AI agents will soon interface seamlessly with blockchain, augmented reality (AR), and 5G networks. While cloud-based SaaS models currently dominate due to their scalability and ease of integration, there is a growing trend toward hybrid deployment models, which combine the flexibility of the cloud with the enhanced security and customization offered by on-premises solutions. This evolution is particularly critical in industries with stringent data privacy regulations, such as financial services and healthcare.

Agentic AI and Insurance

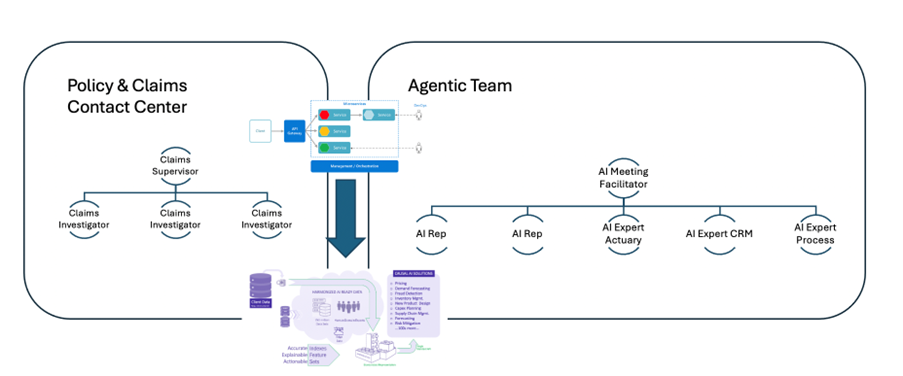

The insurance industry will be deeply impacted by agentic AI. For insurance organizations the biggest risk from AI is being outcompeted by other companies that deploy agentic AI. It is possible for outside players to create an insurance business by recreating an insurance operations team end to end just using digital agents. The diagram below shows a potential hybrid insurer with the human team on the left and the agentic team on the right integrated by a microservices platform which integrates with a causal knowledge platform.

Source: Author (aggregated from web sources)

Agentic team AI agents undertake different tasks across insurance sector roles collaborating with the human team. A digital twin of a human domain expert works with the agentic team as a master agent. AI agents work 24/7 and produce so much output that the master agent is deployed to filter the output for human consumption. A digital software workforce is infinitely scalable so multiple agents that capture, store, and reuse knowledge interact with the subject matter experts, keeping the human in the loop to make final determination. This addresses talent shortage and increases the magnitude of operational capacity.
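One way to picture the master agent's filtering role is the sketch below. It is a simplified assumption of how such a filter could work, not a description of any vendor's product: the agent names, the `confidence` and `premium_impact` fields, and the thresholds are all hypothetical. The master agent drops low-confidence findings, ranks the rest by estimated impact, and passes a short list to the human underwriter, who makes the final determination.

```python
def master_filter(findings, max_items=2, min_confidence=0.6):
    """Keep credible findings and return the most material ones for human review."""
    credible = [f for f in findings if f["confidence"] >= min_confidence]
    ranked = sorted(credible, key=lambda f: abs(f["premium_impact"]), reverse=True)
    return ranked[:max_items]

# Hypothetical output from four specialised AI agents.
findings = [
    {"agent": "submission-intake", "summary": "Missing flood zone data",
     "confidence": 0.90, "premium_impact": 0.05},
    {"agent": "third-party-data", "summary": "Prior claims found at address",
     "confidence": 0.80, "premium_impact": 0.20},
    {"agent": "pricing", "summary": "Suggested rate change",
     "confidence": 0.40, "premium_impact": 0.30},
    {"agent": "compliance", "summary": "Sanctions check clear",
     "confidence": 0.95, "premium_impact": 0.00},
]

for_review = master_filter(findings)
for item in for_review:
    print(item["agent"], "-", item["summary"])
```

In practice the filtering criteria would come from the domain expert whose digital twin acts as the master agent.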

Regulation may slow progress toward product-market fit, so regulators need to be part of the holistic solution early in the process. Human knowledge is the ultimate corporate asset, with agentic knowledge workers deployed to address growth opportunities. Underwriting will be a beneficiary of agentic AI through training, continuous improvement, and real-time policy audits. AI can automate pricing with guardrails that minimize human error, ensure data integrity, and maintain regulatory compliance by explaining results to regulators in support of responsible AI practices.

Underwriting is paramount to the insurance value chain but has challenges such as data quality, unstructured documents, talent shortage, and an abundance of risk-related rules. Underwriter AI agents will collect information using RAG and agentic AI and ingest these large submissions, breaking down the data, accessing third-party sources, and presenting the human underwriter with information for pricing. Third party administrators (TPAs) or smart managing general agents (MGAs)[xlii] will be early adopters for agentic AI growth to retain knowledge while reducing the cost of outsourcing and removing uncertainty to allow free passage of new embedded and parametric insurance trends.

Embedded Insurance Powered by AI

Insurance coverage can be integrated into the customer purchase of a product or service in one transaction, opening up new distribution channels. AI-powered software platforms will enable embedded insurance products into third-party brands. For smart devices this moves from a “detect and repair” model to a “predict and prevent” approach, shifting from fixing problems post event to mitigating before they happen. Anticipatory insurance[xliii] is a trend where there is enough confidence in the causal prediction that part of a claim can be paid before an event happens, which would be an ultimate game changer in client-centric service.

The business-to-business (B2B) space for small- and medium-sized enterprises (SMEs) will be top-line growth for the embedded channel. By purchasing cyber cover, for example, through embedded offers when buying hardware and software online, SMEs will get cyber coverage at point of sale through multiple touchpoints. Smart MGAs can use AI agentic platforms with multiple AI underwriting agents to distribute insurance products to consumer-facing brands. This aggregates underwriting capacity from multiple insurers, conducting end-to-end digital journeys, interfacing with distributors through customizable APIs, and owning and managing the insurance value chain, including underwriting, service operations, data analytics, claims management, product development, and pricing.

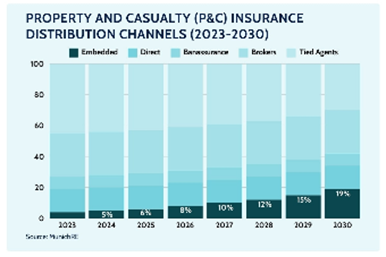

Embedded insurance is a key driver in the agentic AI model, especially as digital agents operate at the cloud edge on smart devices that have closer relationships with end users, capturing additional data to aid pricing. The global insurtech market is expected to grow from $8.63 billion in 2024 to $32.47 billion by 2029[xliv], with a CAGR of 30.34 percent, helping to address the global insurance protection gap, projected to reach $1.89 trillion by 2025[xlv]. AI enables insurers to offer hyper-personalised products by analysing vast amounts of data, assessing risks more accurately, and optimising claims processes in real time. The embedded insurance market is expected to account for 30 percent of all insurance transactions by 2030[xlvi].

Source: Munich RE

Smart devices allow the original equipment manufacturer (OEM) industry to be at the forefront of innovation, delivering leading-edge products and adding value beyond the initial purchase. OEMs can expand their offerings by integrating embedded insurance. Devices are like people and need to be treated as such in the digital world, which means proper identity and provenance. Peer-to-peer (P2P) insurance presents a new solution to consumers who question traditional insurance. A true P2P network is a mutual group of members who can sell insurance to each other but equally these members could also be devices that could also transact together, and it is possible an AI agent on one device could sell insurance to another device.

Trust in Agentic AI

The Trust Spanning Protocol (TSP)[xlvii], a project by the Linux Foundation, is designed for multiple autonomous parties that maintain data-driven conditional trust relationships. This will strengthen trust in human and AI agent interactions. Autonomous AI agents must be separated from their developers and deployers in a trust model because they can act autonomously. Such separation and delegation of agency and control must be built into the foundation by design. TSP allows entities such as AI agents to be authenticated, monitored, and controlled, defining accountability and imposing tight control. Technical platforms must be able to support a trust framework that separates entities in a verifiable manner so that liability can be established without unconditionally trusting the parties when messages are exchanged between endpoints with highly assured trust.

The Convergence of AI Agents and the Internet of Things

The fusion of AI agents and Internet of Things (IoT) devices (AIoT) is at the forefront of agentic AI transformation. These device AI agents, whether retail or industrial, learn from data and continuously improve their performance through real-time insights and control, enabling autonomous connected devices to independently decide and execute the best course of action. The agents ingest data via RAG, which means IoT networks and endpoints operate and deliver data and insights in real time with a minimum of human intervention.

Reinforcement learning is applied to the process where AI querying tools (SQL) utilise data from IoT devices. Sun Microsystems said in 1984, “the network is the computer.”[xlviii] An example is motor telematics, where a car is a device on the network with advanced sensors and on-board diagnostics that collect real-time data on driver behaviour, speed, and road conditions. This data is used to calculate a driver’s risk profile and can support personalized, dynamic insurance pricing. Real-time risk assessment is another area where AI is enhancing embedded insurance with IoT devices and intelligent data analytics. By leveraging data from IoT devices like health wearables, sensors, or connected vehicles, insurers can continuously monitor evolving risks, enabling more accurate and timely adjustments to coverage.

Edge Computing and Edge AI

Edge computing processes data on local IoT devices/sensors instead of sending it to a centralised cloud or data centre. With the increasing importance of real-time decision-making, particularly in industrial automation, strategies now pivot toward integrating edge computing capabilities. Local AI agents that stay connected to centralized learning systems are better equipped to operate, as data is processed close to its source, integrating AI algorithms into edge devices without the need for constant connectivity. This is known as edge AI[xlix]. The diagram below shows a causality knowledge grid example. Attention should be paid to the red-circled edge data, for without robust data integrity, edge data cannot be trusted, pass regulatory muster, or be commercialized.

Source: Vulcain

With edge computing, data processing continues even if the connection to the central cloud is lost. The marriage of industrial IoT with agentic AI/physical AI (robot) systems represents the intersection of AI and advanced robotics interacting with the physical world. Forward-thinking manufacturers are already seeing ROI from these integrated technologies, with benefits ranging from 37 percent reduction in defects to 7 times returns on investment within months[l].
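The point that edge processing continues when the cloud connection drops can be sketched as a store-and-forward pattern. This is a minimal illustration under assumed names (`EdgeNode`, `limit`, `outbox`), with a plain list standing in for the central cloud. The device makes its local decision on every reading and queues results until connectivity returns.

```python
from collections import deque

class EdgeNode:
    """Toy edge device: decides locally, uploads when the cloud is reachable."""

    def __init__(self, limit):
        self.limit = limit      # local alert threshold (hypothetical units)
        self.outbox = deque()   # results waiting to be uploaded
        self.uploaded = []      # stand-in for the central cloud store

    def process(self, reading, cloud_online):
        # The local decision is made regardless of connectivity.
        alert = reading > self.limit
        self.outbox.append({"reading": reading, "alert": alert})
        if cloud_online:
            self.flush()
        return alert

    def flush(self):
        # Send everything queued while offline, oldest first.
        while self.outbox:
            self.uploaded.append(self.outbox.popleft())

node = EdgeNode(limit=80.0)
stream = [(75.0, True), (85.0, False), (90.0, False), (70.0, True)]
alerts = [node.process(reading, online) for reading, online in stream]
print(alerts, len(node.uploaded), len(node.outbox))
```

Two alerts are raised while the link is down, and the queued results reach the cloud once the connection is restored.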

Breakdowns in manufacturing are expensive. Industrial IoT sensors powered by AI collect high-quality data across machine networks, identifying equipment needing pre-emptive maintenance. These sensors measure vibration, temperature, and electricity usage to estimate potential future points of failure, enabling manufacturers to schedule maintenance before costly breakdowns occur. Narrative AI[li] and video can also be used in this process.
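
One simple way to picture the estimation of a future point of failure is to fit a trend line to recent sensor readings and extrapolate to a known limit. The sketch below does this with an ordinary least-squares slope. The function name, the hourly sampling interval, and the vibration limit are illustrative assumptions; production systems typically use far richer models.

```python
from typing import Optional


def hours_until_limit(readings: list[float], limit: float) -> Optional[float]:
    """Extrapolate a least-squares trend to estimate when `limit` is reached.

    `readings` are assumed to be hourly samples, oldest first.
    Returns None when there is too little data or no upward trend.
    """
    n = len(readings)
    if n < 2:
        return None
    x_mean = (n - 1) / 2
    y_mean = sum(readings) / n
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    sxy = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(readings))
    slope = sxy / sxx
    if slope <= 0:
        return None  # readings are flat or improving
    intercept = y_mean - slope * x_mean
    crossing_hour = (limit - intercept) / slope
    # Hours remaining, measured from the most recent sample.
    return max(0.0, crossing_hour - (n - 1))


if __name__ == "__main__":
    vibration_mm_s = [1.0, 2.0, 3.0, 4.0]  # hypothetical hourly readings
    print(hours_until_limit(vibration_mm_s, limit=10.0))
```

A maintenance agent could compare the returned estimate with the lead time needed to schedule a technician and raise an alert when the margin becomes too small.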

This approach not only reduces downtime but also extends equipment lifespan and optimizes maintenance resources across the factory floor. With industrial IoT networks, quality assurance monitoring can now be done remotely and automatically with real-time alerts. With edge computing, the data never leaves the factory, minimizing the risk of interception by third parties. 5G technology positively impacts the industrial IoT landscape through enhanced speed, low latency, and better communication between IoT devices. Currently IoT devices are generating over 2.5 quintillion bytes of data[lii] every day because smart devices send real-time data to the cloud for processing, analysis, and storage.

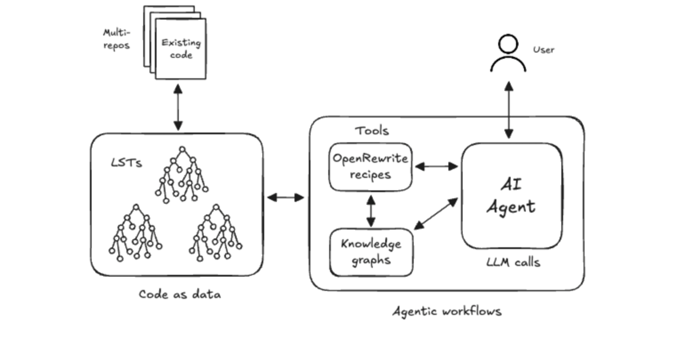

Revolutionizing Application Modernization with Agentic AI

Enterprises face challenges modernizing legacy applications containing decades of critical business logic (e.g., COBOL[liii]). Traditional approaches to modernization require years of effort, costly investment, and business disruption, which have frequently led to modernization initiatives being delayed, leaving businesses with technical debt.

Agentic AI represents the next frontier in AI, able to plan, reason, and execute complex workflows with minimal human guidance. This autonomous capability is transformative when applied to the complex challenge of application modernization. Leveraging AI agents that analyse codebases and develop modernization strategies accelerates digital transformation. Early adopters are reporting reduced timelines, lower costs, and improved business outcomes, making agentic AI the most promising technology for enterprise modernization.

Source: DevOps.com[liv]

AI agents can rapidly translate cryptic legacy code into clear process descriptions that both technical and business stakeholders can understand, addressing the knowledge gap that exists when documentation is sparse and subject matter experts have left. In real-world implementations, modernization timelines and costs are reduced by 40 percent to 50 percent[lv]. Agentic AI modernization can support continuous evolution rather than point-in-time migration. The resulting “Augmented Enterprise” strategically integrates autonomous systems to amplify human capabilities, liberating humans from routine and repetitive tasks to work on activities that drive sustainable competitive advantage and evergreen revenue. AI agents not only convert code but also identify and remediate previously unknown security vulnerabilities, creating a secure modernized system while preserving compliance.

Explainable AI

Regulation and compliance mean explainability is a fundamental requirement when enabling agentic AI, which leads to counterfactual questions as to why decisions were reached. Deep learning neural networks use reinforcement learning to assist with explainability. Causality endows models by fusion to achieve agentic AI prediction, such as early disease detection, giving researchers a 24/7 AI workforce. Counterfactual questions need to be applied for explanation and presented to regulators, EU AI Act enforcers, and for responsible or ethical AI reasons. The human must remain in the loop even though these autonomous agents can make decisions without direct human oversight.

A critical question is: Can agentic AI platforms be explainable and governed responsibly? Black-box bottlenecks can arise because advanced AI models involve numerous layers of computation and complex interactions that make decisions difficult to track. The tension between accuracy and explainability is a fundamental challenge in the field of AI, as the pursuit of high accuracy can overshadow explainability[lvi].

Source: SAS[lvii]

Agentic AI actively participates in critical decision-making processes. Explainability fosters trust from end users and regulators to executives. When an AI-driven recommendation influences medical diagnoses, insurance underwriting, or investment strategies, stakeholders demand transparency not only to assess accuracy but also to validate fairness, compliance, and ethical considerations. Without governance, explainability alone is not enough. Counterfactual explanations are key to intervention of agentic AI models.

Causality and Explanation

It is not surprising that causality is an answer to explainability. Traditional neural networks, which power most AI platforms today, are trained on large datasets containing examples of both occurrences and non-occurrences of an event. The model then identifies correlations in the data to make predictions. However, this approach has two major limitations:

- If the true cause of an outcome isn't in the data, the model can only approximate a solution by overfitting additional variables.

- The reasoning behind its predictions is an opaque “black box,” making it difficult to validate or improve without simply adding more data.

While this method can work well in certain contexts, such as content recommendation systems like Netflix where the data variables are numerous and loosely related, it poses significant risks in domains like healthcare, where decisions must be explainable and reliable. Causality builds interpretable indexes designed to reflect how a human expert would make decisions. Rather than relying on opaque correlations, models follow structured, transparent logic. When identifying outliers, the criteria are explicitly defined rather than leaving it to the model to infer them. This results in a system that mimics human reasoning, ensuring clarity, traceability, and accuracy at every step.

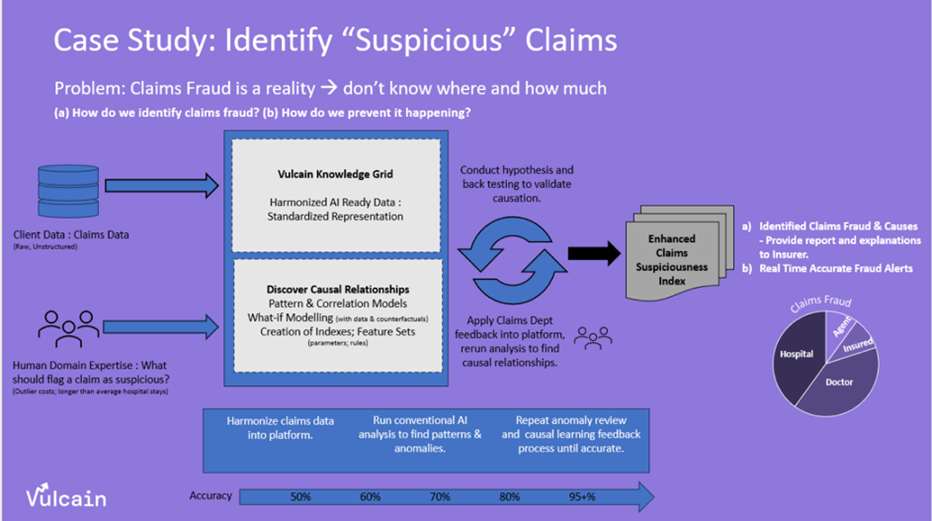

In the insurance claim fraud case study below, numerous indexes were created for all the items related to the claim and risk scores applied. Then those individual causal factors are used to create the overall index used.

Source: Eumonics

If a claim is marked suspicious, then the causes are explained via multiple sub-indexes as defined in the domain expertise feedback process and assigned a risk score on each index so users can see all risk scores associated with the causal drivers for a claim. Causality enriches claims data with external sources such as pollen registries, air quality indexes, climate patterns, and epidemiological alerts. This engineers causal indexes, like environmental exposure and seasonal respiratory risk, which measure true drivers rather than surface-level correlations.
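
The sub-index approach described above can be sketched as a weighted combination in which every causal driver keeps its own visible score. The index names, weights, and the 0.6 threshold below are hypothetical placeholders, not values from the case study.

```python
def claim_risk(sub_indexes: dict[str, float],
               weights: dict[str, float],
               threshold: float = 0.6) -> dict:
    """Combine per-driver risk scores (each 0..1) into one overall index.

    The result keeps the ranked drivers so a reviewer can see why a
    claim was flagged, not just that it was flagged.
    """
    total_weight = sum(weights[name] for name in sub_indexes)
    overall = sum(sub_indexes[n] * weights[n] for n in sub_indexes) / total_weight
    # Rank drivers by their weighted contribution to the overall index.
    drivers = sorted(sub_indexes,
                     key=lambda n: sub_indexes[n] * weights[n],
                     reverse=True)
    return {
        "overall": round(overall, 3),
        "suspicious": overall >= threshold,
        "drivers": drivers,
    }


if __name__ == "__main__":
    scores = {"provider_history": 0.9, "claim_timing": 0.5, "environmental_exposure": 0.2}
    weights = {"provider_history": 0.5, "claim_timing": 0.3, "environmental_exposure": 0.2}
    print(claim_risk(scores, weights))
```

Because the weights and criteria are explicit, domain experts can review and adjust them, which is the feedback loop the case study relies on.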

Collaboration with human experts validates the causal relationships and ensures their relevance. This delivers transparent, explainable outputs that identify the direction, magnitude, and duration of each contributing factor. This enables users to forecast claim pressures, identify high-risk regions, and make data-driven decisions around policy and pricing strategy. Data integrity is a key process as data needs provenance and proof it has not been compromised.

Multi-Agentic AI Systems (MAS)

Multi-agentic AI systems (MAS) involve multiple AI agents working in unison or independently to achieve a common goal, and are effective in scenarios requiring advanced coordination, scalability, and adaptability. In industries like supply chain management, healthcare, and autonomous vehicles, MAS is being used to optimize operations and enhance decision-making.

In agentic MAS, a central master agent manages and directs the activities of other AI agents to achieve overarching goals. The master agent orchestrates actions, allocates resources, and makes high-level decisions. To carry out its tasks effectively, the coordinating agent can use tools, models, or other agents that are either domain-oriented or task-oriented in complementary ways, ensuring that the system can both understand the context and execute specific actions to accomplish the desired outcomes. For example, a financial forecasting agent might pass a proposed budget to a compliance agent for regulatory validation, resulting in more robust decisions than any single agent could achieve alone.
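
The budgeting example can be sketched as a master agent that runs specialist agents in sequence and records which of them acted. Everything here is a hypothetical simplification: the agents are plain functions, the 10 percent growth rule and the regulatory cap are made-up inputs, and a real system would call models and tools rather than fixed rules.

```python
from typing import Callable

Agent = Callable[[dict], dict]


def forecasting_agent(task: dict) -> dict:
    """Propose a budget by growing last year's spend by 10% (illustrative rule)."""
    return {**task, "proposed_budget": round(task["last_year_spend"] * 1.10, 2)}


def compliance_agent(task: dict) -> dict:
    """Validate the proposal against a regulatory cap supplied with the task."""
    return {**task, "compliant": task["proposed_budget"] <= task["regulatory_cap"]}


class MasterAgent:
    """Coordinates specialist agents and decides when a human must review."""

    def __init__(self, pipeline: list[Agent]):
        self.pipeline = pipeline

    def run(self, task: dict) -> dict:
        trace = []
        for agent in self.pipeline:
            task = agent(task)
            trace.append(agent.__name__)  # audit trail of which agent acted
        task["trace"] = trace
        # Keep the human in the loop whenever compliance is not confirmed.
        task["needs_human_review"] = not task.get("compliant", False)
        return task


if __name__ == "__main__":
    master = MasterAgent([forecasting_agent, compliance_agent])
    print(master.run({"last_year_spend": 1000.0, "regulatory_cap": 1200.0}))
```

The trace gives an audit trail for explainability, and the review flag is one simple way to route non-compliant proposals back to a person.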

Source: Medium

The MAS model also provides a pricing shift into evergreen revenue. As AI agents run continuously, learning and interacting 24/7, usage-based pricing where customers pay only for what they use can be invoked, and vendors can share in the gains or savings, based on successful outcomes. Balancing usage costs and accurate tools with the right ROI is critical for sustainable growth and maximizing top line.

Accenture has developed the agentic Snowflake platform[lviii] which created an agent to do a specific task as an insurance claim agent automating part of the claims process and generating highly accurate SQL. The AI agent reviews documents, summarizes information, makes claims decisions, and generates personalized claims letters to clients, explaining the reasoning behind an approval or denial and keeping the human in the loop.

Microsoft and Databricks jointly developed Azure Databricks[lix] as an open analytics platform for building, deploying, sharing, and maintaining enterprise-grade data, analytics, and AI solutions at scale, integrated with cloud computing infrastructure. Data platform layers such as Snowflake and Databricks have added a harmonization layer to which multiple agents attach, along with agentic operation and an orchestration module where agents work in concert, guided by top-down objectives but executing a bottom-up plan.

Large Action Models (LAM)/Small Action Models (SAM)

An AI agent is an AI model encased in operational logic and empowered with action capabilities, hence the need to utilise large action models (LAM). While an LLM’s main function is generating text, a LAM performs actions based on user instructions and is the foundation of agentic AI, acting like humans by analysing data and acting upon it.

A LAM can automate scheduling and management of records. AI agents are backed by well-designed LAM and in reality are small models wired together, maximising infusion of knowledge by combining domain-specific workflows and tasks as a causal component model where one change cascades through the rest as a causal neural network, through microservices that understand causality mechanisms, and why things happen.

Decisions cannot be made without cause and effect. Most AI agents are horizontal, designed for broad, cross-functional applications as operating systems for organizational workflows. However, specialised verticalization is required by industry sectors. AI agents build on this potential by accessing diverse data through accelerated AI query engines, which process, store, and retrieve information to enhance GenAI models.

The RAG technique allows AI to intelligently retrieve the right information from a broad range of data sources. Enterprise value creation is shifting from LLMs to SLMs, which then evolve to become agentic small action models (SAMs). It is the collection of these SAMs, combined with the data harmonization and semantic layer, which enables clusters of agents to work in concert and create high-impact business outcomes. Pushing beyond the static workflows of robotic process automation (RPA), enterprises are transitioning from RPA to autonomous AI agents that understand language, orchestrate complex operations, and dynamically optimize enterprise automation with minimal oversight.
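
To make the retrieval step of RAG concrete, the sketch below ranks a handful of documents against a question using bag-of-words cosine similarity and places the best match into a prompt. This is a deliberately minimal stand-in: real deployments normally use embedding models and vector stores, and the sample documents and function names are invented for illustration.

```python
import math
from collections import Counter


def _vector(text: str) -> Counter:
    """Very rough bag-of-words representation of a text."""
    return Counter(text.lower().split())


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[term] * b[term] for term in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = _vector(query)
    ranked = sorted(documents, key=lambda d: _cosine(q, _vector(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str]) -> str:
    """Augment the question with retrieved context before it goes to a model."""
    context = "\n".join(retrieve(query, documents))
    return f"Context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "motor policy covers collision damage",
        "health policy covers hospital stays",
    ]
    print(build_prompt("what does the motor policy cover", docs))
```

The generation step is omitted on purpose; the point is only that the model answers from retrieved enterprise data rather than from its training data alone.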

Agentic AI and Cybersecurity

Agentic AI is a cornerstone of cybersecurity as it operates without human input, acting autonomously to detect threats, mitigate risks, and adapt to new forms of cyberattacks. The Cyber Security Tribe’s Annual Report [lx] shows adoption of agentic AI in cybersecurity in 2025. By taking autonomous action to protect systems and users’ security, decisions can be made in milliseconds. Pattern recognition across thousands of data points simultaneously allows real-time adaptation to fraud techniques, autonomous detection of behavioural inconsistencies, and differentiation between human- and AI-generated content such as deepfake videos. The approach uses multiple AI models working in concert, each specialized for different aspects of verification, including demographic bias[lxi].

Verified identity is a foundation of secure messaging, especially in P2P communications networks, where users can verify the identity of anyone who invites them to connect, including network devices. Privacy must be preserved with strong end-to-end encryption and verification embedded in communication platforms for people and devices to prove their identity.

By establishing a baseline of normal behaviour within a network, agentic AI flags deviations indicating a potential breach, such as unauthorized access or unusual patterns of data usage. This enables the system to take action autonomously, such as isolating a compromised device or restricting access to sensitive data. Anomaly detection identifies previously unknown threats by recognizing abnormal patterns in network traffic or user behaviour. This allows agentic AI to identify and neutralize zero-day vulnerabilities, phishing attacks, or malware not yet catalogued by conventional security tools.
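
The baseline-and-deviation idea can be illustrated with a simple statistical check: learn the mean and spread of a device's normal data usage, then respond when a new observation sits far outside it. The z-score threshold, the device identifier, and the "isolate"/"allow" actions are hypothetical; real systems model many signals at once.

```python
from statistics import mean, stdev


def build_baseline(daily_mb: list[float]) -> tuple[float, float]:
    """Summarise normal behaviour as (mean, standard deviation)."""
    return mean(daily_mb), stdev(daily_mb)


def respond(device_id: str, observed_mb: float,
            baseline: tuple[float, float], z_limit: float = 3.0) -> str:
    """Return the action an agent would take for this observation."""
    mu, sigma = baseline
    z = (observed_mb - mu) / sigma if sigma > 0 else 0.0
    if z > z_limit:
        # Unusually high data usage: isolate first, investigate afterwards.
        return f"isolate:{device_id}"
    return f"allow:{device_id}"


if __name__ == "__main__":
    baseline = build_baseline([100.0, 110.0, 90.0, 105.0, 95.0])
    print(respond("sensor-7", 500.0, baseline))
    print(respond("sensor-7", 104.0, baseline))
```

Because the rule depends only on learned behaviour and not on known signatures, it can flag activity that conventional tools have not yet catalogued, at the cost of occasional false positives.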

If an agentic AI system makes an incorrect decision that leads to a data breach or network compromise, accountability becomes the question. An AI system could also mistakenly flag legitimate activity as malicious, leading to unnecessary disruptions in service or unwarranted disciplinary actions. Agentic AI is powerful in cybersecurity defence and also has offensive applications.

Cybercriminals are launching AI-driven attacks that independently adapt and evolve in real time. These autonomous attacks use agentic AI to bypass traditional security defences, evade detection, and exploit vulnerabilities with little to no human oversight. AI-powered malware can autonomously scan networks, identify weak points, and launch targeted attacks without human intervention. These malicious agents can modify their code to avoid detection by antivirus software or firewalls, making them harder to defend against. As both attackers and defenders begin to harness the power of autonomous agentic AI, the cybersecurity landscape will continually change and adapt.

As agentic AI becomes more prevalent in cybersecurity, there is a need for clear regulations and policies to govern its use so that agentic AI systems can provide clear, understandable explanations for their decisions. Liability laws will require updating to account for the autonomous nature of an agentic AI, determining who is responsible for the actions of an AI system, whether it’s the developer, the business deploying the system, or the AI itself. This is a nonrepudiation issue that will need to be resolved as agentic AI becomes mainstream.

Quantum Computing and Agentic AI

Quantum computing leverages the principles of quantum mechanics to solve problems beyond the reach of classical computers.[lxii] The complexity of quantum systems demands advanced tools for optimization, simulation, and problem-solving. AI agents, with their ability to learn, adapt, and manage vast amounts of data, are emerging as essential collaborators and strategic partners in the advancement of quantum computing.

The intersection of agentic AI and quantum computing enables the use of reinforcement learning to optimize quantum algorithms and maximize performance. AI agents manage the computational resources of quantum systems, ensuring efficient utilization, optimization, and the allocation of quantum processors to prioritize high-value computations. Simulating quantum systems on classical computers is computationally intensive. AI agents accelerate simulations by reducing computational overhead, learning from data to improve predictions of quantum behaviour.

AI-driven quantum tools simulate molecular interactions, aiding drug discovery and material design. Quantum simulations, enhanced by AI, predict environmental changes with high accuracy. Quantum computing challenges traditional encryption, but AI agents develop quantum-safe cryptographic methods, designing encryption systems resistant to quantum attacks and optimizing the generation of quantum keys for secure communication. AI agents in quantum computing improve machine learning models by leveraging quantum speed-ups, giving rise to quantum neural networks and quantum-enhanced AI agents, which will evolve into autonomous researchers capable of independently discovering quantum algorithms and insights, making them more practical for everyday use.

Integrated ecosystems are emerging where AI and quantum computing seamlessly collaborate across industries. AI agents will bridge the gap between quantum computing and other fields, such as biology, engineering, and finance. Machine learning fusion models refer to approaches combining multiple models or data sources to create a more accurate or robust system. The idea behind fusion is to integrate different types of information, models, or methodologies to improve the overall performance of a machine learning system (ensemble models[lxiii]).
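
One of the simplest forms of fusion is a weighted average of the probabilities produced by several models, sometimes called soft voting. The sketch below assumes each model has already produced a probability for the same event; the model names and weights are hypothetical.

```python
from typing import Optional


def fuse(predictions: dict[str, float],
         weights: Optional[dict[str, float]] = None) -> float:
    """Weighted average of per-model probabilities for the same event.

    With no weights supplied, every model counts equally.
    """
    if not predictions:
        raise ValueError("at least one model prediction is required")
    if weights is None:
        weights = {name: 1.0 for name in predictions}
    total = sum(weights[name] for name in predictions)
    return sum(predictions[name] * weights[name] for name in predictions) / total


if __name__ == "__main__":
    model_outputs = {"correlative_model": 0.2, "causal_model": 0.6}
    print(fuse(model_outputs))
    print(fuse(model_outputs, {"correlative_model": 1.0, "causal_model": 3.0}))
```

Weighting lets a more trusted model dominate without discarding the others, which is the basic intuition behind ensemble methods.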

Future and Conclusions

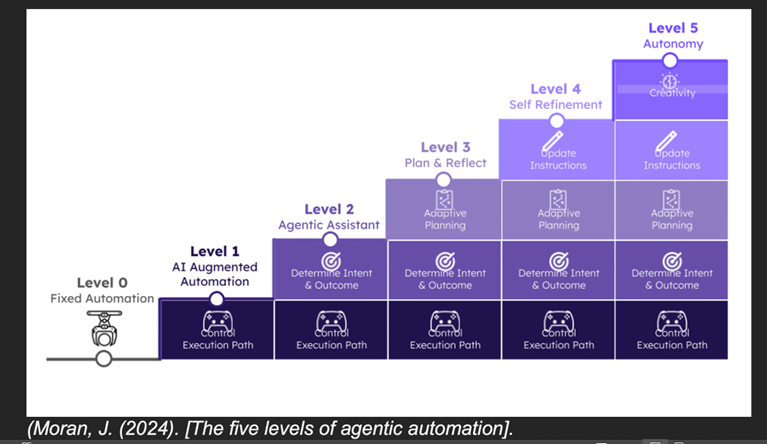

Agentic AI is a transformative business model emerging from the exponential AI era. What started with simple digital assistants has now evolved into a new business model with a digital workforce capable of making decisions and adapting in real time to achieve specific goals. The raison d’etre of agentic AI is to build completely autonomous systems that can understand and manage complex workflows and tasks with minimal human intervention. Agentic systems can grasp nuanced concepts, set and pursue goals, reason through tasks, and adapt their actions based on changing conditions. These systems can consist of a single agent or multiple agents. The chart below shows the path to agent autonomy[lxiv]. However, caveat emptor as the human must stay in the loop within the autonomy phase.

The advent of GenAI and LLM catalysed an acceleration of AI adoption among enterprises, representing the highest spending trajectory among all technology sectors. Businesses now envision applying advanced, mature causal AI techniques to complement GenAI, empowering a transformative, agentic AI model translating to the capturing, storage, and reuse of knowledge. This can manage and complete tasks independently, adapting its approach based on its interactions and learnings, collaborating with other digital systems and AI agents to achieve complex goals and acting as a proxy for human users.

Recent advances in “causal reasoning models” have convinced some observers that GenAI has reached human-level ability, known as artificial general intelligence (AGI). There is no evidence to show that is the case, and it is early days before an AI agent is left to make autonomous decisions without the human in the loop. Researchers are developing new tools that allow them to look inside these models. The results raise many questions about whether the models are anywhere close to AGI in the near future, if ever, and there are many governance guardrail issues to be faced.

Humans are by nature causal. So causal reasoning becomes a critical ingredient to fill that agentic AI gap identified by Gartner to infuse tacit knowledge to understand why certain things happen, given certain conditions. This outlines what can be done, what should be done, how to do it, and the best path for doing it, plus being able to actually explain it.

AI today is more pervasive and integrated into our environments than visible robots suggest, operating as an invisible network in smart devices. AI is emerging as an operating system (AI OS), a step beyond AI integration, by connecting devices and communicating between them without interference. AI OS incorporates capabilities such as real-time processing, distributed computing, edge AI integration, and enhanced security protocols to facilitate efficient AI-driven applications. AI OS are particularly relevant in domains that require adaptive learning and autonomous decision-making by integrating AI capabilities at their core so they can learn, adapt, and improve over time based on user interactions and data inputs. When all devices are linked together by an AI OS, the network truly becomes the computer. Traditional operating systems, such as Windows, macOS, or Linux, provide a basic framework for managing hardware and running applications but rely on predefined rules and algorithms to perform tasks and do not use AI to complete or improve these tasks.

The Fourth Industrial Revolution continues unabated, spanning the dot-com boom, Web 2.0, mobile, IoT, data, cloud, SaaS, Web 3.0, and now AI. Agentic automation looks poised to create a profound transformation, one that will upend not only how software is built and deployed, but how it’s delivered, monetized, and integrated into enterprise workflows. The aim is to use reinforcement learning to achieve a state space, which endows the AI agent with the full set of possible situations (states) that can be encountered in its environment, using a mathematical model of computation. This has synergies with quantum computing as thousands of states can be shared by all instances of applications.

Agentic AI is achieved through microservice architectures incorporating correlative and causal-based AI reasoning methods using a network of LLM/LAM and domain-optimized SLM/SAM. It creates agents that can collaborate, assess the impact of different actions, and fully explain their decisions and reasoning. Most importantly, they should engage with humans to gain domain-specific knowledge and tacit know-how to help achieve desired outcomes. A key milestone is to make AI initiatives self-funding to achieve ROI/payback.

Cybersecurity will be reformed by agentic AI from the aspects of automated threat detection, automated incident response, and predictive analysis. Reaching autonomy is the outstanding timeframe question and how to mitigate the risks of AI[lxv] when that point is achieved. Causality and application of human reasoning into the subfields of AI goes a long way to addressing these risks, keeping the human in the loop and being able to use AI agents to ask counterfactual questions in the same manner as human thinking. The insurance industry will be impacted by this transformation and needs to prepare now.

Organisations will enhance customer experiences, achieve efficiency gains, and be able to shape more positive business outcomes. Human oversight will become more curatorial and strategic as AI takes over the heavy lifting at the operational level. Adopting agentic AI strategies is not optional, and competitive advantage will be gained by early adopters.

References

[i] https://medium.com/@divine_inner_voice/the-drawbacks-of-linear-regression-models-limitations-and-their-impact-on-accuracy-675853072a3

[ii] https://www.tableau.com/analytics/what-is-time-series-analysis

[iii] https://www.pwc.com/m1/en/publications/documents/2024/agentic-ai-the-new-frontier-in-genai-an-executive-playbook.pdf

[iv] https://en.wikipedia.org/wiki/Deep_learning

[v] https://english.unl.edu/sbehrendt/StudyQuestions/ContextualAnalysis.html#:~:text=A%20contextual%20analysis%20is%20simply,the%20text%20as%20a%20text.

[vi] https://seantrott.substack.com/p/mechanistic-interpretability-for

[vii] https://arxiv.org/abs/2410.21272

[viii] https://www.virtuosoqa.com/

[ix] https://www.gartner.com/en/articles/intelligent-agent-in-ai

[x] https://en.wikipedia.org/wiki/Reinforcement_learning#:~:text=Reinforcement%20learning%20(RL)%20is%20an,to%20maximize%20a%20reward%20signal.

[xii] https://en.wikipedia.org/wiki/Computational_linguistics

[xiii] https://library.fiveable.me/key-terms/introduction-cognitive-science/cognitive-augmentation

[xiv] https://www.tellius.com/resources/blog/is-a-semantic-layer-necessary-for-enterprise-grade-ai-agents

[xv] https://aibizflywheel.substack.com/p/causality-in-agentic-ai

[xvi] https://www.businesswire.com/news/home/20250108935586/en/Causal-AI-Research-Forecast-Report-2024-2030-Market-to-Reach-%24456-Million-by-2030-Growing-at-a-CAGR-of-41.8-Driven-by-Demand-from-Healthcare-Finance-and-Autonomous-Vehicles---ResearchAndMarkets.com#:~:text=It%20is%20anticipated%20that%20the,41.8%25%20throughout%20the%20forecast%20period.

[xvii] https://pages.dataiku.com/ai-today-in-fsi

[xviii] https://arxiv.org/pdf/2410.05229

[xvix] https://vulcain.ai/

[xx] https://aws.amazon.com/what-is/sql/

[xxi] https://www.faistgroup.com/news/global-ai-market-2030/

[xxii] https://en.wikipedia.org/wiki/Transformer_(deep_learning_architecture)

[xxiii] https://thecuberesearch.com/

[xxiv] https://www.promptingguide.ai/techniques/cot

[xxv] https://openreview.net/pdf?id=_3ELRdg2sgI

[xxvi] https://web.cs.ucla.edu/~kaoru/3-layer-causal-hierarchy.pdf

[xxvii] https://www.prnewswire.com/news-releases/artificial-intelligence-ai-market-worth-1-339-1-billion-by-2030--exclusive-report-by-marketsandmarkets-302166982.html

[xxviii] https://market.us/report/agentic-ai-market/#:~:text=The%20Global%20Agentic%20AI%20Market,period%20from%202025%20to%202034.

[xxvix] https://market.us/report/agentic-ai-market/#:~:text=In%202024%2C%20North%20America%20held,2024%20with%20CAGR%20of%2043.6%25.

[xxx] https://www.atera.com/blog/agentic-ai-stats/

[xxxi] https://research.google/blog/google-duplex-an-ai-system-for-accomplishing-real-world-tasks-over-the-phone/

[xxxii] https://www.ibm.com/products/watsonx-assistant/healthcare

[xxxiii] https://www.mayoclinic.org/

[xxxiv] https://learn.microsoft.com/en-us/azure/bot-service/?view=azure-bot-service-4.0

[xxxv] https://www.digitalcommerce360.com/2025/03/04/amazon-alexa-plus-features-agentic-ai-ecommerce/

[xxxvi] https://www.pega.com/

[xxxvii] https://artificialintelligenceact.eu/

[xxxviii] https://en.wikipedia.org/wiki/DeepSeek

[xxxix] https://openai.com/index/announcing-the-stargate-project/

[xl] https://www.forbes.com/councils/forbestechcouncil/2024/12/19/how-to-deploy-production-ready-ai-agents-that-drive-real-business-value/

[xli] https://market.us/report/agentic-ai-market/#:~:text=Product%20Type%20Analysis-,In%202024%2C%20the%20Ready%2DTo%2DDeploy%20Agents%20segment%20held,scalable%20AI%20solutions%20across%20industries.

[xlii] https://yndtec.org/smart-mga/

[xliii] https://www.energymonitor.ai/sustainable-finance/why-anticipatory-insurance-is-the-next-frontier-for-climate-aid/

[xliv] https://openinsuranceobs.com/wp-content/uploads/2024/03/Open-Embedded-Insurance-Report-2024.pdf

[xlv] https://www.pwc.com/bm/en/press-releases/insurance-in-2025-and-beyond.html

[xlvi] https://www.ey.com/en_us/insights/insurance/how-insurers-and-new-entrants-can-take-advantage-of-embedded-ins

[xlvii] https://trustoverip.org/

[xlviii] https://en.wikipedia.org/wiki/The_Network_is_the_Computer

[xlix] https://blogs.nvidia.com/blog/what-is-edge-ai/

[l] https://www.linkedin.com/pulse/industrial-iot-agentic-ai-agents-physical-revolution-2025-bottacci-bnp1f/

[li] https://speechify.com/blog/what-is-narrative-ai/?srsltid=AfmBOopEKDU6_Q3r8l1JAq9a1sbU9S7xWcrjeFFigwMco2kntHnyc_nF

[lii] https://www.sciencedirect.com/science/article/abs/pii/S0952197622003803#:~:text=IoT%20is%20expanding%20at%20a,et%20al.%2C%202021).

[liii] https://en.wikipedia.org/wiki/COBOL#:~:text=COBOL%20(%2Fˈkoʊb,language%20designed%20for%20business%20use.

[liv] https://devops.com/data-determinism-and-ai-in-mass-scale-code-modernization/?utm_source=rss&utm_medium=rss&utm_campaign=data-determinism-and-ai-in-mass-scale-code-modernization

[lv] https://www.linkedin.com/pulse/revolutionizing-application-modernization-agentic-ai-amahl-williams-dp7uf/

[lvi] https://www.linkedin.com/pulse/quandary-model-interpretability-bridging-gap-between-iain-brown-ph-d--yckue/

[lvii] https://blogs.sas.com/content/sascom/2025/03/24/beyond-the-black-box-how-agentic-ai-is-redefining-explainability/

[lviii] https://www.snowflake.com/en/blog/agentic-ai-financial-services-insurance/

[lix] https://www.databricks.com/

[lx] https://www.cybersecuritytribe.com/annual-report

[lxi] https://www.pragmaticinstitute.com/resources/articles/data/consequences-of-demographic-bias/#:~:text=What%20is%20Demographic%20Bias%3F,considerably%20more%20men%20than%20women.

[lxii] https://www.internationalinsurance.org/insights_quantum_technologies_cybersecurity_and_the_change_ahead

[lxiii] https://builtin.com/machine-learning/ensemble-model#:~:text=What%20Are%20Ensemble%20Models%3F,of%20building%20a%20single%20estimator.

[lxiv] https://sema4.ai/blog/the-five-levels-of-agentic-automation/

[lxv] https://builtin.com/artificial-intelligence/risks-of-artificial-intelligence

6.2025